When you google 'reset rabbitmq user password', there are a lot of helpful answers built around CLI tools like rabbitmqctl, which I initially thought was something you could install locally and point at a cluster. But alas, after a bit of reading you find out that it is installed on the nodes themselves, and is not something you can install with brew and use locally. So... how do you use it when you have Rabbit installed in a remote k8s cluster?

Could not copy properties/launchSettings.json with docker and .Net (MSB3026, MSB3027, MSB3021)

Out of the blue, some of our docker builds stopped working with an error saying it could not copy launchSettings.json when doing a build in our .NET 7.0 containers: Could not copy "/src/api/properties/launchSettings.json" to "bin/Release/net7.0/properties/launchSettings.json". Beginning retry 1 in 1000ms. Could not find a part of the path '/src/api/bin/Release/net7.0/properties/launchSettings.json'. The only thing that had changed was... Continue Reading →

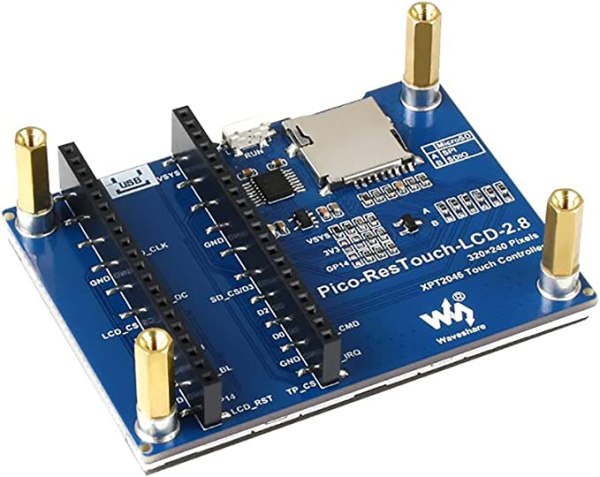

Using the WaveShare Pico ResTouch LCD 2.8 Screen with CircuitPython

Another day, another random display from WaveShare that has pretty poor documentation, and me trying to get it working with CircuitPython... I must be CRAZY. NB: As always, I waffle on a bit here... click here to skip to the tl;dr technical details. I do feel like I am losing the plot with these sometimes... Continue Reading →

Building a K3S Cluster using Raspberry Pis

If you are going to build a Pi cluster using K3S, the easiest way to get up and running is to use the pre-baked setup script from k3s.io. However, as with all things Linux/Pi... there are a few steps you might want to do first. Initially, flash all your Pis with the Raspberry Pi Imager using... Continue Reading →

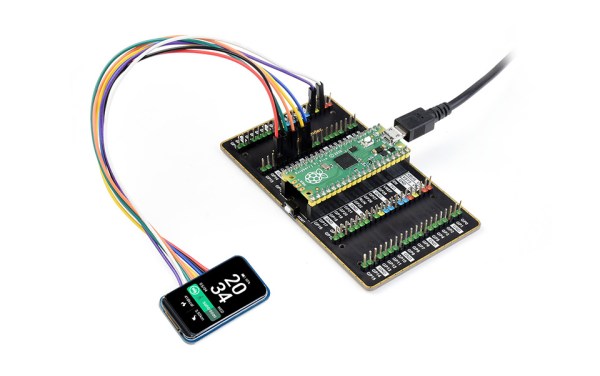

Using the WaveShare 1.47″ Curved SPI Display with a Raspberry Pi Pico and CircuitPython

I recently purchased (read: impulse bought) two of these Waveshare 1.47" SPI displays with curved corners. They look pretty great, and I thought one might be interesting to use in a project that I am working on using a Raspberry Pi Pico W. They have a picture of it wired up to a Pico on the... Continue Reading →

Encrypting and Decrypting Strings (Easily) in CircuitPython

Look, this is nothing new, and I haven't invented anything clever, but I couldn't find an easy copy-and-paste solution to take a string (of any length), encrypt it with a password... and then decrypt it later on with CircuitPython. I should start by saying, I'm not a Python expert, I'm barely even a... Continue Reading →

Solving “Service Bus account connection string ‘ServiceBus’ does not exist. Make sure that it is a defined App Setting” when building Azure WebJobs

As always, this is a rambling rant about how/why I came across this solution. If you want to skip to the copy/paste, click here. I used to love WebJobs... they were great. I also used to love Azure Service Bus... it offered so many features, and Service Bus Explorer is fantastic for managing the queues... Continue Reading →

Using --build-args with docker in Azure DevOps Pipelines

This should be simple, right? Even with the new YAML pipeline editor, you should be able to do the old 'build and push' and fire a few arguments in. Nope. Doesn't work. Here's how you can do it.

VSCode .devcontainer using dotnet5.0/.Net 5

If, like me, you have recently discovered the power of the .devcontainer functionality of VS Code and its remote debugging, you may be dismayed that there isn't support for net5.0/.NET 5 yet. However, as we are developing a new API at the minute that's not yet released, we upgraded it to net5 to see... Continue Reading →

Azure Redis Cache – “No endpoints specified” Error (In dotnet core)

We use a Redis server for caching some basic requests in our web apps. Recently we were seeing Redis timeouts during testing... there was no server load, only about 7 requests were being processed a minute, and our Redis server was reporting roughly 1% capacity usage... strange. We were using the package Microsoft.Extensions.Caching.Redis Version... Continue Reading →